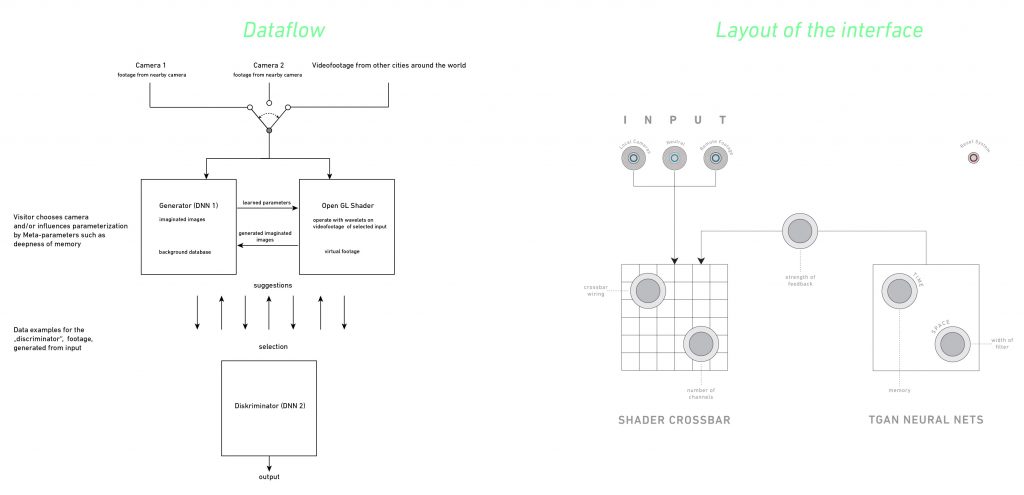

Membrane is an art installation produced as the main work of an exhibition of the same name at the Kunstverein Tiergarten in Berlin in early 2019. It builds on a series of generative video installations with real-time video input. Membrane allows the viewer to interact directly with the generation of the image by a neural network, here the so-called TGAN algorithm. An interface makes it possible to experience the ‘imagination’ of the computer, guided by the visitor's curiosity and personal preferences.

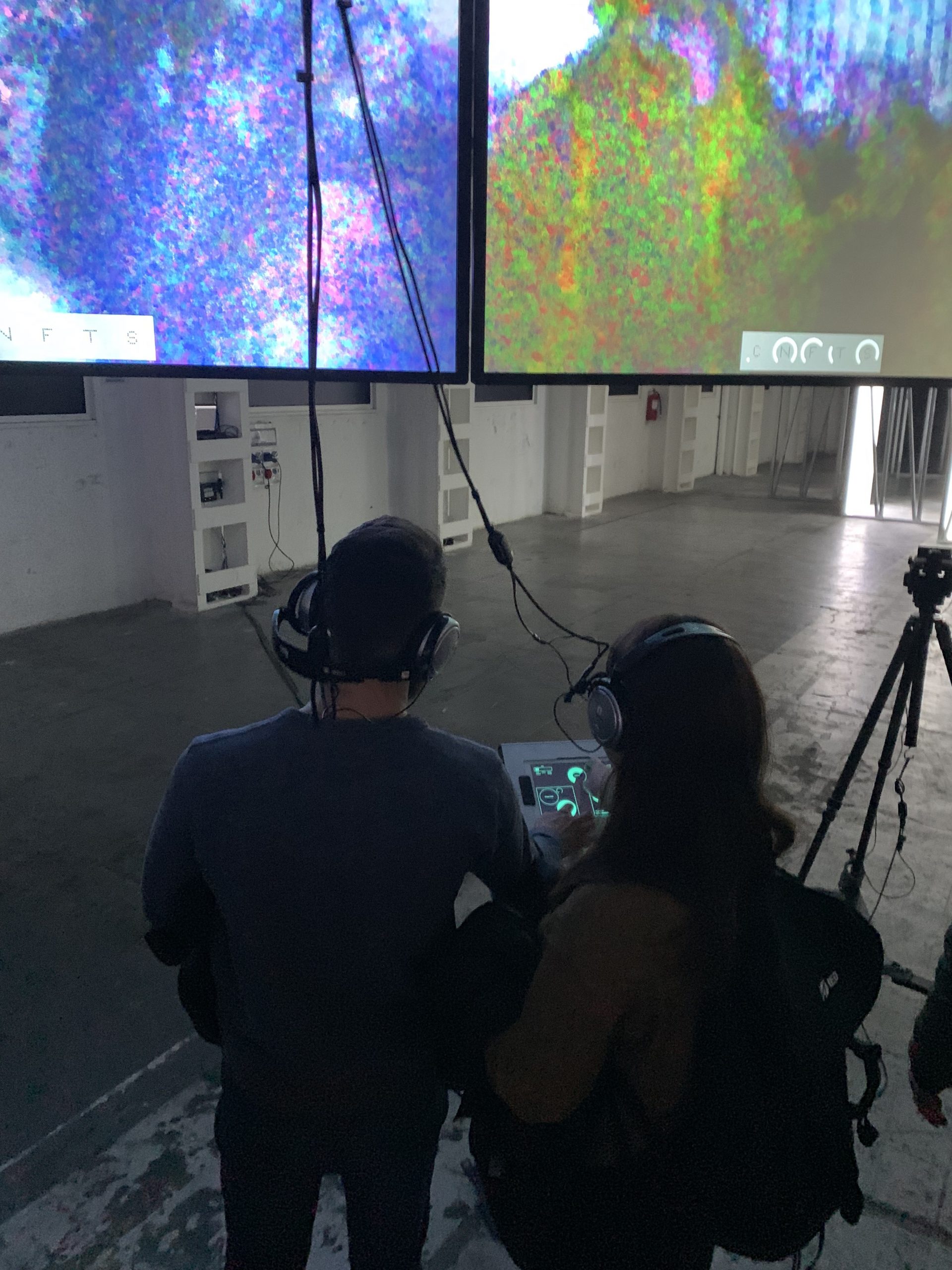

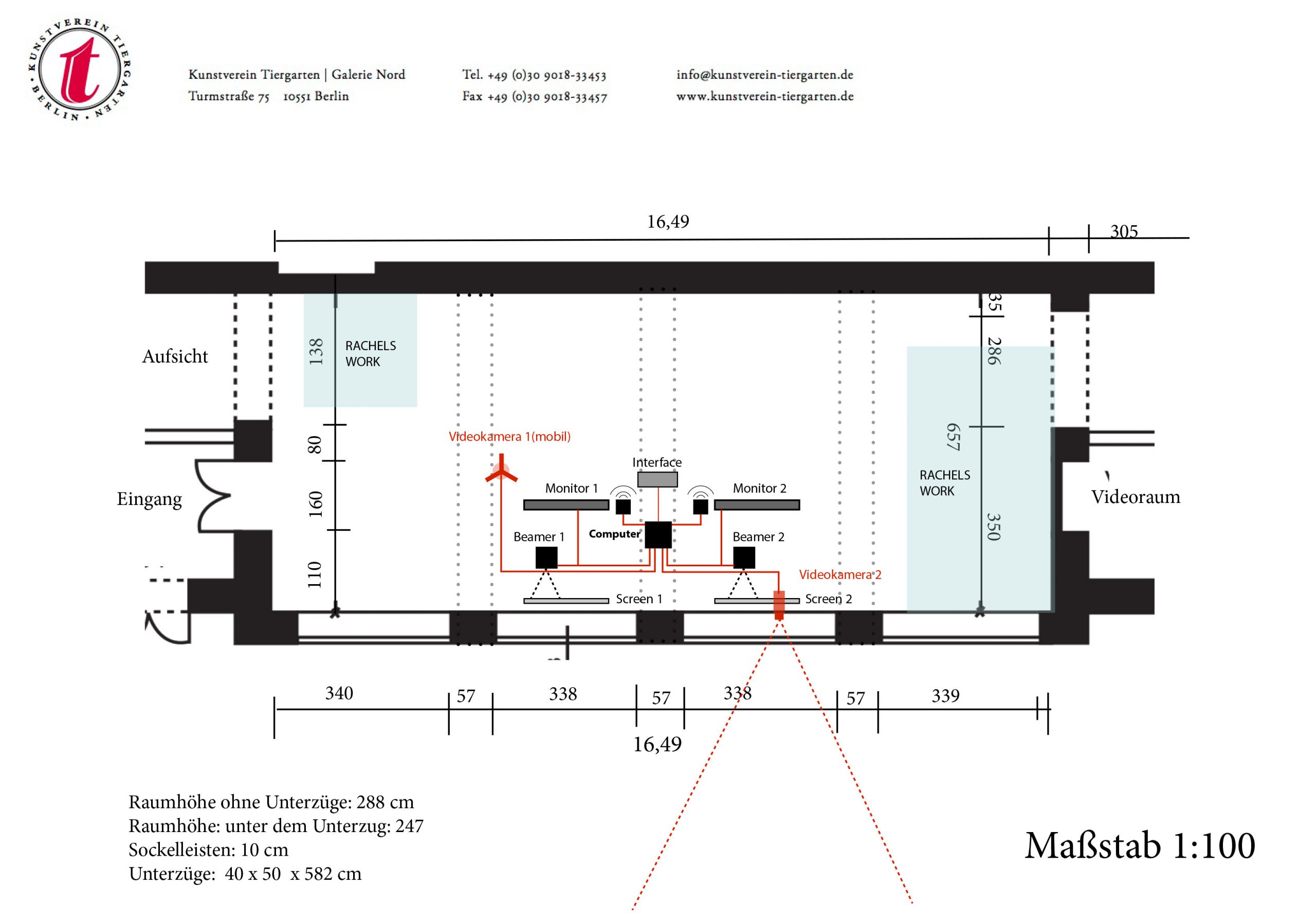

The images of Membrane are derived from a static video camera observing a street scene in Berlin. A second camera is positioned in the exhibition space and can be moved around at will. Two screens show both scenes in real time.

In earlier artistic experiments in this context, we considered each pixel of a video data stream as an operational unit. Each pixel learns from colour fragments while the programme runs and delivers a colour that can be considered the sum of all colours seen during the running time of the camera. This simple form of memory creates something fundamentally new: a recording of patterns of movement at a certain location.
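The per-pixel memory described above can be sketched as a running mean that every pixel keeps for itself. This is a minimal illustration, not the code used in those earlier works: the function names, the tiny 1x2 test "video" and the choice of a plain mean (rather than some other weighting of past colours) are all assumptions.

```python
def empty_memory(height, width):
    """One [r, g, b] accumulator per pixel, initialised to black."""
    return [[[0.0, 0.0, 0.0] for _ in range(width)] for _ in range(height)]


def update_memory(memory, frame, count):
    """Fold one frame into the per-pixel running mean; returns the new frame count."""
    count += 1
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            mem = memory[y][x]
            for c in range(3):
                # Incremental mean: each pixel blends the new colour into
                # everything it has seen so far at this location.
                mem[c] += (pixel[c] - mem[c]) / count
    return count


# Hypothetical 1x2 "video": the left pixel stays black,
# the right pixel flickers between white and black.
frames = [
    [[(0, 0, 0), (255, 255, 255)]],
    [[(0, 0, 0), (0, 0, 0)]],
    [[(0, 0, 0), (255, 255, 255)]],
    [[(0, 0, 0), (0, 0, 0)]],
]

memory = empty_memory(1, 2)
count = 0
for frame in frames:
    count = update_memory(memory, frame, count)

# The still pixel remains black; the flickering pixel settles on mid-grey,
# so the stored colour records how much movement passed through that spot.
print(memory[0][0], memory[0][1])
```

With a live camera, the same update would simply run once per incoming frame; places with a lot of movement drift towards blended colours, while static places keep their own.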

On a technical level, Membrane controls not only pixels or clear-cut details of an image, but image ‘features’ which are learnt, remembered and reassembled. With regard to the example of colour: we choose the features, but their characteristics are delegated to an algorithm. TGANs (Temporal Generative Adversarial Nets) implement ‘unsupervised learning’ through the opposing feedback of two subnetworks: a generator produces short sequences of images and a discriminator evaluates the artificially produced footage. The algorithm has been specifically designed to learn representations of uncategorised video data and, with their help, to produce new image sequences.
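The opposing roles of the two subnetworks can be made concrete with a toy sketch. It assumes a random linear generator, a logistic discriminator and the standard non-saturating GAN losses; the published TGAN uses a more elaborate architecture and training objective, and nothing here is taken from Membrane's code. All names and sizes are hypothetical, and no training step is shown, only how the two losses pull in opposite directions.

```python
import math
import random

FRAMES, PIXELS, LATENT = 4, 6, 3  # hypothetical toy sizes


def make_generator(rng):
    """Random linear map from a latent vector to a clip of FRAMES x PIXELS values."""
    weights = [[[rng.uniform(-1, 1) for _ in range(LATENT)]
                for _ in range(PIXELS)] for _ in range(FRAMES)]

    def generate(z):
        # tanh keeps every generated pixel value in [-1, 1].
        return [[math.tanh(sum(w * zi for w, zi in zip(row, z))) for row in frame_w]
                for frame_w in weights]

    return generate


def make_discriminator(rng):
    """Logistic scorer: returns the probability that a clip is real footage."""
    weights = [[rng.uniform(-1, 1) for _ in range(PIXELS)] for _ in range(FRAMES)]

    def discriminate(clip):
        score = sum(w * v for wf, f in zip(weights, clip) for w, v in zip(wf, f))
        return 1.0 / (1.0 + math.exp(-score))

    return discriminate


def adversarial_losses(discriminate, real_clip, fake_clip):
    """Standard GAN losses: the two networks are rewarded for opposite outcomes."""
    eps = 1e-12  # avoids log(0)
    p_real = discriminate(real_clip)
    p_fake = discriminate(fake_clip)
    # Discriminator: call real clips real and generated clips fake.
    d_loss = -math.log(p_real + eps) - math.log(1.0 - p_fake + eps)
    # Generator: make the discriminator call generated clips real.
    g_loss = -math.log(p_fake + eps)
    return d_loss, g_loss


rng = random.Random(0)
generate = make_generator(rng)
discriminate = make_discriminator(rng)

# Stand-in for a short clip of camera footage (random values, assumption).
real_clip = [[rng.uniform(-1, 1) for _ in range(PIXELS)] for _ in range(FRAMES)]
fake_clip = generate([rng.gauss(0, 1) for _ in range(LATENT)])

d_loss, g_loss = adversarial_losses(discriminate, real_clip, fake_clip)
print(f"discriminator loss {d_loss:.3f}, generator loss {g_loss:.3f}")
```

In actual training, each network's weights would be updated in turn to lower its own loss, which is the feedback loop the paragraph describes.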

We extend the TGAN algorithm by adding a wavelet analysis, which allows us to interact from the start with image features rather than only with pixels. Thus, our algorithm allows us to ‘invent’ images in a more radical manner than classical machine learning would allow.
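What it means to work on features instead of pixels can be illustrated with a single level of a 2D Haar transform. The text does not say which wavelet Membrane uses or how it is coupled to the TGAN, so the Haar basis, the band names and the `gain` parameter below are assumptions chosen for brevity.

```python
BANDS = ("approx", "dx", "dy", "diag")


def haar2d(image):
    """One level of a 2D Haar transform on a grayscale image with even height and width."""
    h, w = len(image) // 2, len(image[0]) // 2
    bands = {name: [[0.0] * w for _ in range(h)] for name in BANDS}
    for y in range(h):
        for x in range(w):
            a, b = image[2 * y][2 * x], image[2 * y][2 * x + 1]
            c, d = image[2 * y + 1][2 * x], image[2 * y + 1][2 * x + 1]
            bands["approx"][y][x] = (a + b + c + d) / 4  # local brightness
            bands["dx"][y][x] = (a - b + c - d) / 4      # left/right contrast
            bands["dy"][y][x] = (a + b - c - d) / 4      # top/bottom contrast
            bands["diag"][y][x] = (a - b - c + d) / 4    # diagonal contrast
    return bands


def inverse_haar2d(bands, gain=None):
    """Rebuild the image; `gain` optionally rescales individual bands first."""
    gain = gain or {}
    h, w = len(bands["approx"]), len(bands["approx"][0])
    image = [[0.0] * (2 * w) for _ in range(2 * h)]
    for y in range(h):
        for x in range(w):
            ll, dx, dy, dg = (bands[n][y][x] * gain.get(n, 1.0) for n in BANDS)
            image[2 * y][2 * x] = ll + dx + dy + dg
            image[2 * y][2 * x + 1] = ll - dx + dy - dg
            image[2 * y + 1][2 * x] = ll + dx - dy - dg
            image[2 * y + 1][2 * x + 1] = ll - dx - dy + dg
    return image


# Hypothetical 2x4 image with one vertical edge in the right-hand block.
image = [
    [100, 100, 100, 140],
    [100, 100, 100, 140],
]

bands = haar2d(image)
restored = inverse_haar2d(bands)                    # identical to the input
sharpened = inverse_haar2d(bands, gain={"dx": 2.0})  # edge exaggerated

print(bands["dx"])   # [[0.0, -20.0]]: only the right block contains an edge
print(sharpened[0])  # [100.0, 100.0, 80.0, 160.0]
```

Changing a single coefficient alters an edge or a contrast as a whole rather than an individual pixel. Larger gains can push values outside the displayable range, so a real pipeline would need to clip or renormalise them.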

In practical terms, the algorithm speculates on the basis of its learning and develops its own, self-organised temporality. However, this does not happen without an element of control: feature classes from a selected dataset of videos are chosen as target values. In our case, the dataset consists of footage of street views from other cities around the world, taken while travelling.

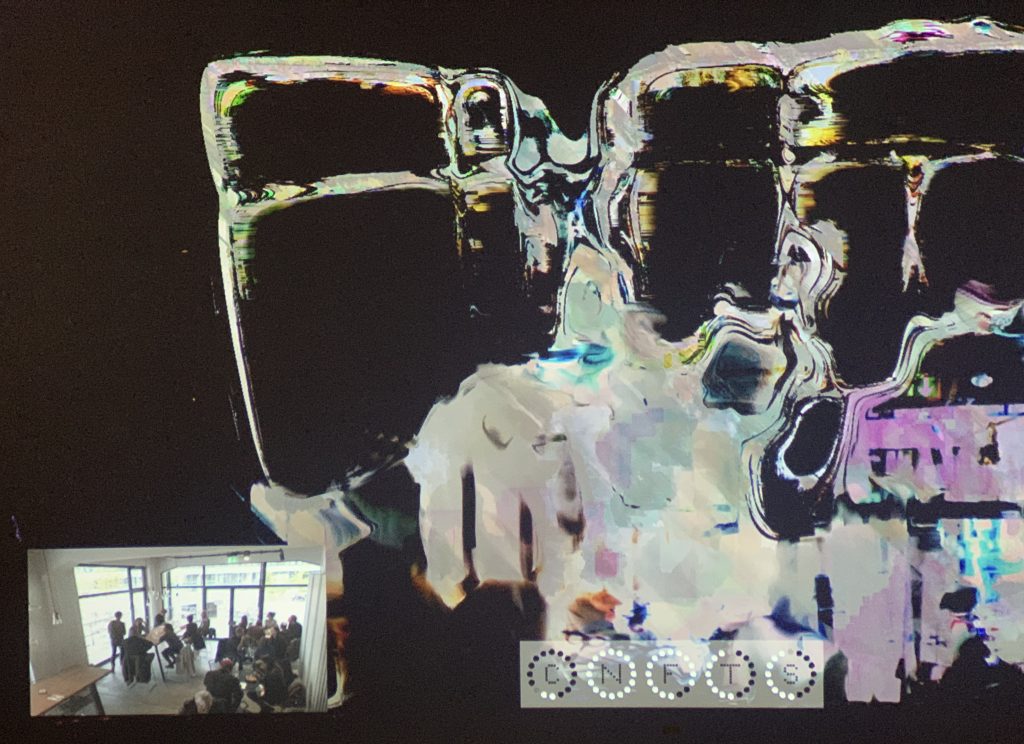

The concept behind this strategy is not to adapt our visual experience in Berlin to global urban aesthetics, but rather to fathom its specificity and to invent by association. These associations can be localised, varied and manipulated within the reference dataset. Furthermore, our modified TGAN algorithm opens up numerous possibilities for dynamic learning on both short and long timescales, ultimately controlled by the user or visitor. The installation itself allows the video footage to be manipulated from an unchanged street view to purely abstract images, based on the features found in the footage. The artwork asks how we want to alter realistic depictions. What are the distortions of ‘reality’ we are drawn to? Which fictions lie behind these ‘aberrations’? Which aspects of what we see do we neglect? Where do we go with such shifts in image content, and what will be the perceived experience at the centre of artistic expression?

From an artistic point of view, the question now arises: how can something original and new be created with algorithms? This is the question behind the software design of Membrane. Unlike other AI artworks, we don’t want to identify something specific within the video footage; rather, we are interested in how people perceive the scenes. That is why our machines look at smaller, formal image elements and features whose intrinsic values we want to reveal and strengthen. We want to expose the visitors to intentionally vague features: edges, lines, colours, geometrical primitives, movement. Here, instead of imitating a human way of seeing and understanding, we reveal the machine’s way of capturing, interpreting and manipulating visual input. Interestingly, the resulting images resemble pictorial developments of classical modernism (progressive abstraction on the basis of formal aspects) and repeat artistic styles like Pointillism, Cubism and Tachism in a uniquely unintentional way. As part of the pictorial transformation, these styles fragmented what is perceived into individual sensory impressions. Motifs now become features of previously processed items and successively lose their relation to reality. At the same time, we ask whether these fragmentations of cognition proceed in an arbitrary way, or whether there may be other concepts of abstraction and imagery ahead of us.

From a cultural perspective, there are two questions remaining:

– How can one take decisions within those aesthetic areas of action (parameter spaces)?

– Can the shift of perspective from analysis to fiction help to assess our analytical procedures in a different way, by understanding them as normative examples of our societal fictions that serve predominantly as a self-reinforcement of present structures?

Thus, unbiased artistic navigation within the surplus of normative options for action might become a guarantor of novelty and the unseen.

Programming: Peter Serocka, Leipzig

Sound: Teresa Carrasco, Bern

The work has been produced with the kind support of Kreativfonds Bauhaus Universität Weimar and HEK Basel

https://vimeo.com/349417754

My talk at the conference Re-Imagining AI:

https://vimeo.com/showcase/6375722/video/366513412

Art and Science Talk, LABoral Gijon; our talk starts at 1:15:00.